Making a Compiler

So you want to make your own compiler, from scratch?

That won't be easy. Some compilers are comparable in complexity to entire simple operating systems.

Still, it can be a fun and/or educational project. Here are some things you should know before you get started:

Pros and Cons

Why write a compiler instead of using an existing one?

Pros:

- A compiler for the language you want and the target you want might simply does not exist.

- Educational, you get a better idea of how the kernel, compiler, and the run-time libraries work together, and what it takes to implement a language.

- Speaking of, you can implement your own new programming language, right then and there! How cool is that?

- You can do just about anything with the program being compiled:

- Add debugging data in your favorite format

- Add profiling aids

- Re-write and re-arrange parts of the program

- Optimize for something specific

- Throw it out and replace it with another program

- The compiled programs would fit your machine or OS like a glove, even if it's a new OS you're developing.

Cons:

- Complete understanding of the language required. Always keep the official language spec at hand.

- Understanding of assembly specific to your target is also required.

- Most of the notes on OSDev motivation apply to compilers as well.

- It will be tricky to fully comply with the language spec.

- You would have to be really, really good at this if you wish to compete with GCC in terms of size/speed optimization of the compiled code.

Terminology

Well, if you're still up to it, let's now look at how compilers work:

Terminology:

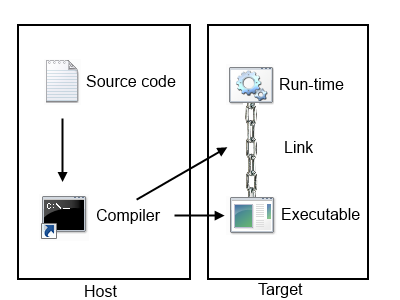

- The "Host" is the environment where the compiler itself runs.

- The "Target" is where the compiled program is meant to run.

- The "run-time" is a set resources (libraries, processes, etc) that exist on the Target. Two machines with identical hardware that differ in availability of run-time resources are sometimes thought of as different targets, as a program might run on one but not the other.

- The "executable" is a file containing information necessary for the Target to launch the program. It can be a flat binary, but is usually more elaborate, containing for example linking and re-location information.

- The "link" signifies that the program knows how to interface with the run-time, and relies on the run-time for some of it's functionality. It is created by the linker, and does not exist in free-standing programs (i.e. OS kernel).

If the host and the target are the same the product is a "native executable". This is what you see 99% of the time. If the compiler is capable of producing an executable for a target different from the host, it's a "cross-compiler". If the compiler is capable of compiling itself, it's "self-hosting".

Internals

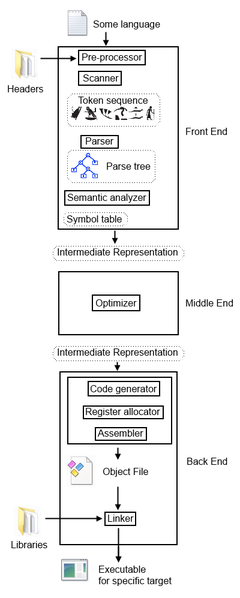

Compilers also have a peculiar internal structure. You do not have to replicate the one described here exactly, but it's a good road map for now. Generally, the task of compiling a program is subdivided into several individual tasks:

- The Front End takes the source code for some specific language, and outputs a universal "intermediate representation" (IR).

- The Middle End (optional) takes the intermediate representation, changes it in some way, and outputs it back.

- The Back End takes the IR and outputs an executable for a specific target.

The idea for this division is that by having a collection of different front-ends, and different back ends, you can compile a program in any language, to any target, because the IR is the same for all of them.

Front End

- First of all, the front end usually includes some sort of user interface, so that files and options can be accepted. Then, there are several stages of processing.

- For C-like languages, a pre-processor copies and pastes the code from header files, removes comments performs macro expansion and does other relatively simple tasks.

- Then, a scanner (or tokenizer) breaks up the source file into a series of tokens, representing basic words of a language (i.e. identifiers, number and string constants, keywords like "if" and "while", punctuation marks and semicolons)

- Then, a parser reads the stream of tokens and constructs a parse tree, a tree-like structure that represents the structure of the source code. In terms of language-making, each source code file is a "sentence" of the language, and every sentence, if it is valid, follows a specific syntax.

- Next, a semantic analyzer walks the parse tree and determines the specific meaning of each part of that "sentence". For instance, if a statement is a declaration, it adds an entry to a symbol table, and if it is an expression involving a variable, it substitutes an address of the known variable from the symbol table for the variable inside the expression.

- Finally, the Front End generates an Intermediate Representation, which captures all the information specific to the source code of the program. A good IR says everything that the source says and more, and also captures additional requirements that the language places on the program. It can be in the form of a parse tree, abstract syntax tree, three address code or something more exotic.

It's worth noting that this break-up is purely for convenience of the compiler developer. Most types of parsers can easily read a stream of characters as opposed to a stream of tokens, and so do not need a separate scanner. Some very powerful classes of parsers can even determine the semantic meaning of statements before they're even done reading, and so do not need a semantic analyzer. They might be more difficult to understand and work with, though.

Middle End

Applies a whole bunch of algorithms meant to try and make the program more efficient. I'm not really familiar with this part, so I won't go into details. Since all it does is optimization, you could omit it for simplicity.

Back End

- First, the code generator reads an intermediate representation, and emits assembly-like code, the only difference being that it assumes a machine with infinite number of registers (or some other resource) for simplicity.

- Second, a register allocator juggles available registers on the CPU by deciding when a variable is to be stored in a register or in memory. This part has major impact on performance. Once it's done, the assembly-like code becomes true assembly.

- Then, an assembler reads the assembly and outputs machine code. Usually, the code still has some unresolved references, so the machine code is put inside an object file.

- Next, a linker satisfies every unresolved reference in an object file by linking it against a library and substituting a pointer to the resource being referred to inside the library. The linker also supplies necessary information to locate the relevant library when linking dynamically, or, when linking statically, some or all of a particular library might be embedded in the object file. The linker is sometimes thought of as being separate from the back end or even the compiler, but the truth is, the operation of a particular linker is specific to the target (or a family of targets) that it links for, so I included it in this category.

- Finally, the back end converts the object file with all references resolved into an executable by adding meta-data necessary for the Target's program loading mechanism to recognize the program, load it, dynamically link it if necessary, and run it.

Soon, I'll go over how to actually write each of those.